Hello,

I’m learning how to “inflate” meshes with Kangaroo Physics but am running into crashing/freezing issues. I would like to know if there are possible hardware or RAM limitations that could be affecting this. I would like to be able to work with higher-res meshes if possible so that I can CNC mill the results without noticeable facets in the surface. I would appreciate any advice on this.

thanks

Michael

020619 inflate test.3dm (3.2 MB)

inflate.gh (14.7 KB)

Is there a reason you’re not using Kangaroo 2?

Just that the tutorials that I found to get me to this point were all for Kangaroo 1. I’m really new at this.

Does Kangaroo 2 use resources better?

K2 is far better and has the same type of example files + more.

Kangaroo 2 should use your computing resources better - it is multi-threaded, and uses a different technique for the solver, allowing it to converge in fewer iterations.

Here’s the equivalent setup for your file:

catenary_subdivide.gh (12.6 KB)

Also, I think the best way forward is not to use higher res meshes for the simulation, but to subdivide using Weaverbird after Kangaroo - this way you can get a very fine and smooth mesh without excessive simulation time.

Thanks Michael. Where would I find the example files?

Thank you very much Daniel,

Unfortunately I can’t seem to get your setup to work. I have Kangaroo 2 and Weaverbird installed but the result stays flat. I’m guessing the node for vertex loads is the analog of K1 gravity? Do I need a strength value attached? Also, what is the “show” node doing?

Also, I’m wondering if my computer is limited somehow. With Kangaroo 1, my machine hangs for 30 seconds or so when I set the mesh. The simulation completes in a few seconds, but then if I do anything else, even something as simple as clicking on the Grasshopper canvas, the software freezes. I also found that it won’t cope very well with more than 30,000 polygons when Grasshopper is running, but in Rhino alone I can easily handle 2 million+ polygons.

My computer is fairly old but it has twin 6-core Xeons with a Quadro FX 3800 video card.

thanks again.

Michael

You need to double click the Toggle connected to the ‘On’ input to start the simulation.

Yes, the Vertex Loads component is applying gravity. No, you don’t need to attach anything - everything in this definition has all inputs already set.

Forget Kangaroo 1. There shouldn’t be any need to use it any more.

With Kangaroo 2 there will still be a pause when a new simulation initialises - this is Kangaroo checking the connectivity of all the goals, and if there are tens of thousands of points it might still take a while, though faster than the old version.

Don’t expect Grasshopper calculations or simulation to operate at the same speed as Rhino just displaying a mesh for the same number of polygons.

The hardware factors that seem to make the biggest difference to Grasshopper and Kangaroo performance are single core speed and RAM.

Thanks so much! So very helpful. Can you recommend a good amount of RAM? Looks like I have 12 GB - I thought I had more. Do you think upgrading my RAM would help?

GH and Rhino depend mostly on the CPU for computing, and on RAM for memory. More RAM won’t help you much. A faster CPU will (faster per core, not overall; that is important to note).

I’m not sure that’s true - see David’s reply here (also noting that it is from 7 years ago - I think 32 GB of RAM is not unreasonable these days). I’ve not done thorough tests of the effects of RAM in isolation, and I’m no expert on this, but my impression was that it can make quite a difference to Grasshopper performance. Once, with a new machine, I realised I didn’t have my RAM set to its full speed, and when I changed it from 1333 to 2133 the difference was noticeable.

I have 32 also, but you can’t just upgrade the RAM without also upgrading the CPU to keep up with it. It’s important that all the parts (RAM, CPU, GPU) are coordinated. If one is a bottleneck, they all become bottlenecked. A faster CPU will definitely make things faster, while the RAM may or may not matter; if the process fills 12 GB quickly then yes, that is an issue. My reason for suggesting the CPU was that Kangaroo 2 is iterative and multi-threaded; if it is iterating, it isn’t really storing that much, is it? I thought it is more a matter of calculating the data, using it for the next generation, and dumping the old data. Well, you would know better than me what K2 is doing.

Thanks for all this. It’s really enlightening. I didn’t realize that the GHz rating could be a total of the cores, not per core… disappointing. Based on what you guys are saying, I’m thinking that my issue is more processor than RAM. Please let me know if you think my theory is good.

My configuration:

Intel Xeon, dual 6-core, 3.33 GHz (does this mean only 0.555 GHz per thread?)

12 GB RAM (I don’t know the speed)

Nvidia Quadro FX 3800

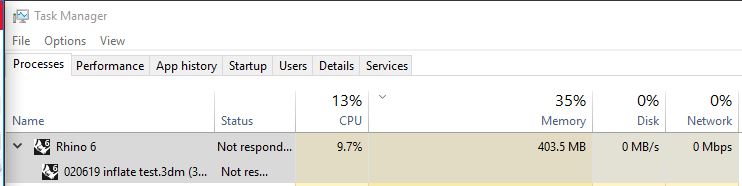

attached is a snapshot of the task manager during the processing of the

The way I understand this is that Grasshopper is maxing out 1 of 12 cores, which would be 8.33% of the CPU, and Rhino is probably using another core to make up the rest of the 9.7%? It’s only using 403 MB of RAM, so that doesn’t seem to be a bottleneck. I ran it with the Kangaroo 2 solver as well but I didn’t see a difference. Is there something I need to do to allow threading?

thanks again

Michael

Sadly, multi-threading doesn’t mean all cores will be maxed out all the time, or that the speed will scale linearly with the number of cores, even if the code consisted of completely independent parts each running on its own core. Also, only parts of the process can be made parallel, since everything needs to be recombined at some point.
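The point about only parts of the process being parallel is usually described with Amdahl's law. Here is a minimal Python sketch of it; the parallel fractions used below are purely hypothetical values for illustration, not measurements of Kangaroo or Grasshopper.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup when only part of the work can run in parallel (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time regardless of core count;
    # only the parallel part is divided among the cores.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical parallel fractions, chosen only to show the trend on 12 cores.
for fraction in (0.5, 0.9, 0.99):
    print(f"{fraction:.0%} parallel on 12 cores: {amdahl_speedup(fraction, 12):.2f}x")
```

Even in this idealised model, which ignores the cost of recombining results, a job that is half serial gets less than a 2x speedup on 12 cores.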

It also might not make sense to focus too much on hardware here, when there are probably much bigger gains to be had by changing the definition setup. For instance - have you tried seeing how low a resolution mesh you can use for the simulation (subdividing after to make it smooth as needed)? I think you might be surprised how little difference it makes to the shape.

If the main issue is that pause when the simulation initialises, there could be room for improvement here too. This initialisation step is designed for flexibility when adding many different goals without the solver needing any knowledge about the topology of what they come from, but in a case like here where they all act on one mesh, there might be a way to skip some of the connectivity searching.

For instance - this is a side-by-side comparison of the surface meshed with around 5000 vertices and around 25000 (as in your original file) - with one extra level of subdivision applied to the coarser one after relaxation.
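To get a feel for the numbers in that comparison, here is a rough Python sketch of how vertex counts grow with one level of subdivision. It assumes a regular all-quad grid and Catmull-Clark subdivision; the mesh in the original file and the Weaverbird component actually used may differ, and the 70 x 70 grid is just a hypothetical stand-in for a mesh of about 5000 vertices.

```python
def quad_grid_counts(nu: int, nv: int) -> tuple[int, int, int]:
    """Vertex, edge and face counts of an open nu x nv grid of quads."""
    vertices = (nu + 1) * (nv + 1)
    edges = nu * (nv + 1) + nv * (nu + 1)
    faces = nu * nv
    return vertices, edges, faces


def catmull_clark_counts(vertices: int, edges: int, faces: int) -> tuple[int, int, int]:
    """Counts after one Catmull-Clark level on an all-quad mesh.

    Every old vertex is kept, and one new vertex is added per edge and per face.
    Each edge is split in two, each quad gains 4 interior edges and becomes 4 quads.
    """
    return vertices + edges + faces, 2 * edges + 4 * faces, 4 * faces


coarse = quad_grid_counts(70, 70)        # hypothetical coarse simulation mesh
fine = catmull_clark_counts(*coarse)     # after one subdivision level
print("coarse (V, E, F):", coarse)
print("subdivided (V, E, F):", fine)
```

Under these assumptions one subdivision level roughly quadruples the vertex count, so a mesh simulated at around 5000 vertices ends up in the same range as the 25000-vertex original while the solver only ever sees the coarse one.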

No, each thread can hit 3.33 GHz, but it’s not that simple. The CPU may boost if needed and therefore be able to go above that limit (if it has this feature). It also may throttle back depending on temperatures and sit below that limit (especially if you’re using a stock cooling option for your CPU).

That 3.33 GHz number is your single-core performance, and generally speaking, 3D modeling programs are more dependent on single-core performance.
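The Task Manager percentages mentioned earlier can be sanity-checked with a little arithmetic. This is a small Python sketch, assuming Task Manager reports usage across 12 logical processors as described in the thread; if hyper-threading is enabled the count would be 24 and the figures change accordingly.

```python
def total_cpu_percent(busy_cores: float, logical_cores: int) -> float:
    """Overall CPU percentage shown when `busy_cores` cores are fully loaded."""
    return 100.0 * busy_cores / logical_cores


def busy_cores_from_percent(percent: float, logical_cores: int) -> float:
    """Equivalent number of fully loaded cores for an overall CPU percentage."""
    return percent * logical_cores / 100.0


# One saturated core out of 12 (the core count is taken from the thread).
print(f"1 of 12 cores busy: {total_cpu_percent(1, 12):.2f}% total")
# The 9.7% reading reported in the thread, expressed as cores' worth of work.
print(f"9.7% of 12 cores: {busy_cores_from_percent(9.7, 12):.2f} cores")
```

A reading just above 8.33% is therefore consistent with one core running at full speed plus a small amount of work elsewhere, not with each thread getting a fraction of the clock rate.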

catenary_subdivide_script.gh (87.6 KB)

Here’s a short scripted version that does the same thing as your definition, but getting the indexing of the particles for the solver directly from the mesh as it creates the goals.
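The difference between the two approaches can be illustrated with a plain Python sketch. This is not Kangaroo's actual code or API; the function name, the quantised-coordinate lookup, and the tiny four-vertex mesh are all assumptions made for illustration. The first approach receives goals as bare point coordinates and has to search for matching particles, while the second takes the indices straight from the mesh.

```python
def indices_by_position(goal_points, tolerance=1e-6):
    """Assign particle indices to goal points by matching coordinates.

    Points that quantise to the same grid cell are treated as one particle.
    (A point very close to a cell boundary could be split from its twin;
    a real implementation would need to handle that.)
    """
    particles = []     # unique particle positions found so far
    lookup = {}        # quantised coordinate -> particle index
    goal_indices = []
    for points in goal_points:
        ids = []
        for p in points:
            key = tuple(round(c / tolerance) for c in p)
            if key not in lookup:
                lookup[key] = len(particles)
                particles.append(p)
            ids.append(lookup[key])
        goal_indices.append(ids)
    return particles, goal_indices


# A hypothetical single-quad mesh: vertices plus edges given as index pairs.
vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]

# Generic route: goals only know coordinates, so indices must be searched for.
goals_as_points = [[vertices[a], vertices[b]] for a, b in edges]
particles, searched = indices_by_position(goals_as_points)

# Direct route: the mesh already knows which vertex each edge end refers to.
direct = [list(edge) for edge in edges]

print(searched)
print(direct)
```

Both routes end up with the same indexing here, but the direct one skips the per-point lookup entirely, which is the kind of work that adds up when there are tens of thousands of points.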

Daniel,

I tried to remesh the original mesh, but it keeps outputting an invalid mesh. Do you know why it’s doing this?

catenary_remesh.gh (1.7 MB)